About Me

AI Developer and Data Scientist with a Physics Background

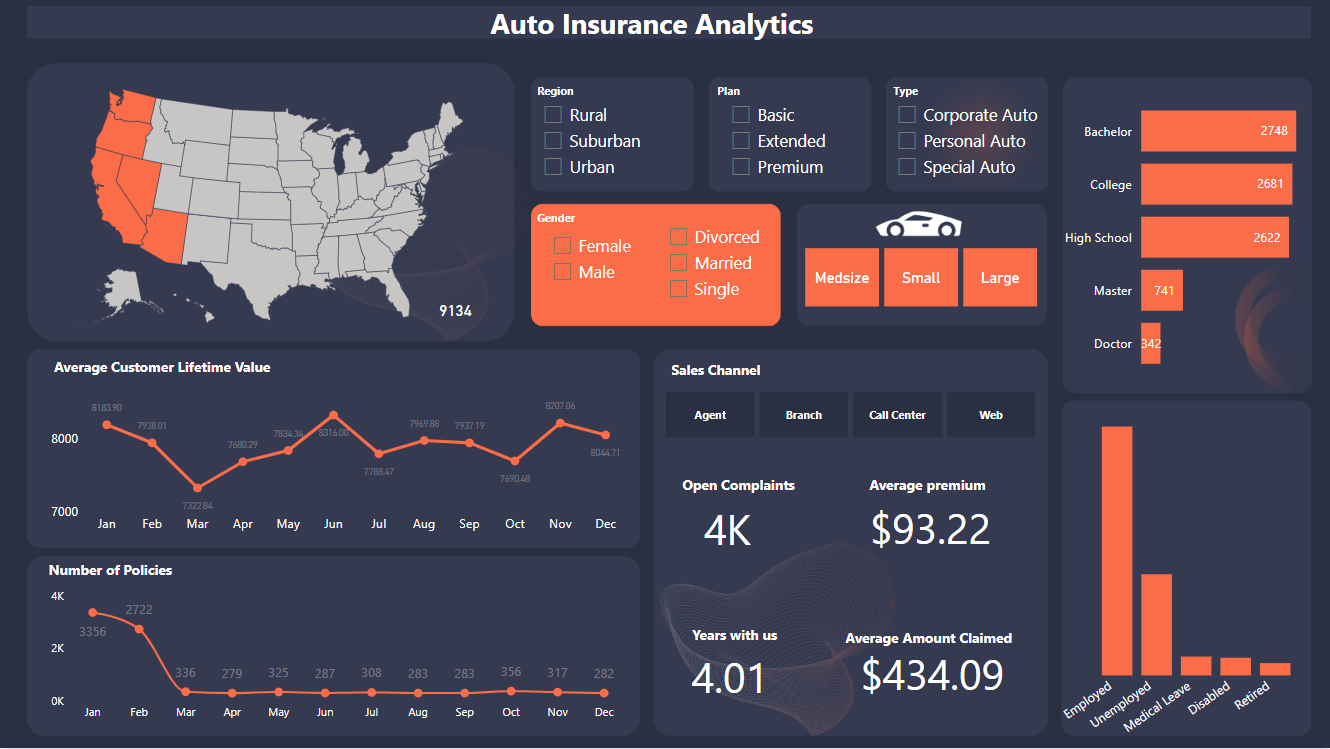

I'm an AI Developer specializing in multi-agent AI systems, LLM optimization, and enterprise-grade infrastructure. I build intelligent systems that solve complex problems at scale.

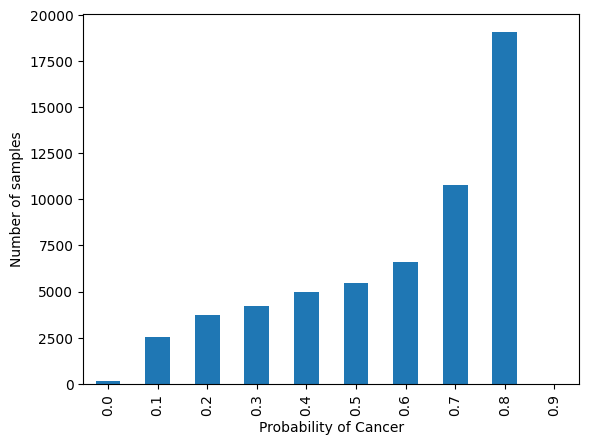

My path from theoretical physics to AI development gave me a distinctive approach to problem-solving. I first discovered the transformative power of data during my undergraduate internship at CUSAT, where I analyzed the El Niño–Southern Oscillation (ENSO), and I carried that interest forward at UMBC.

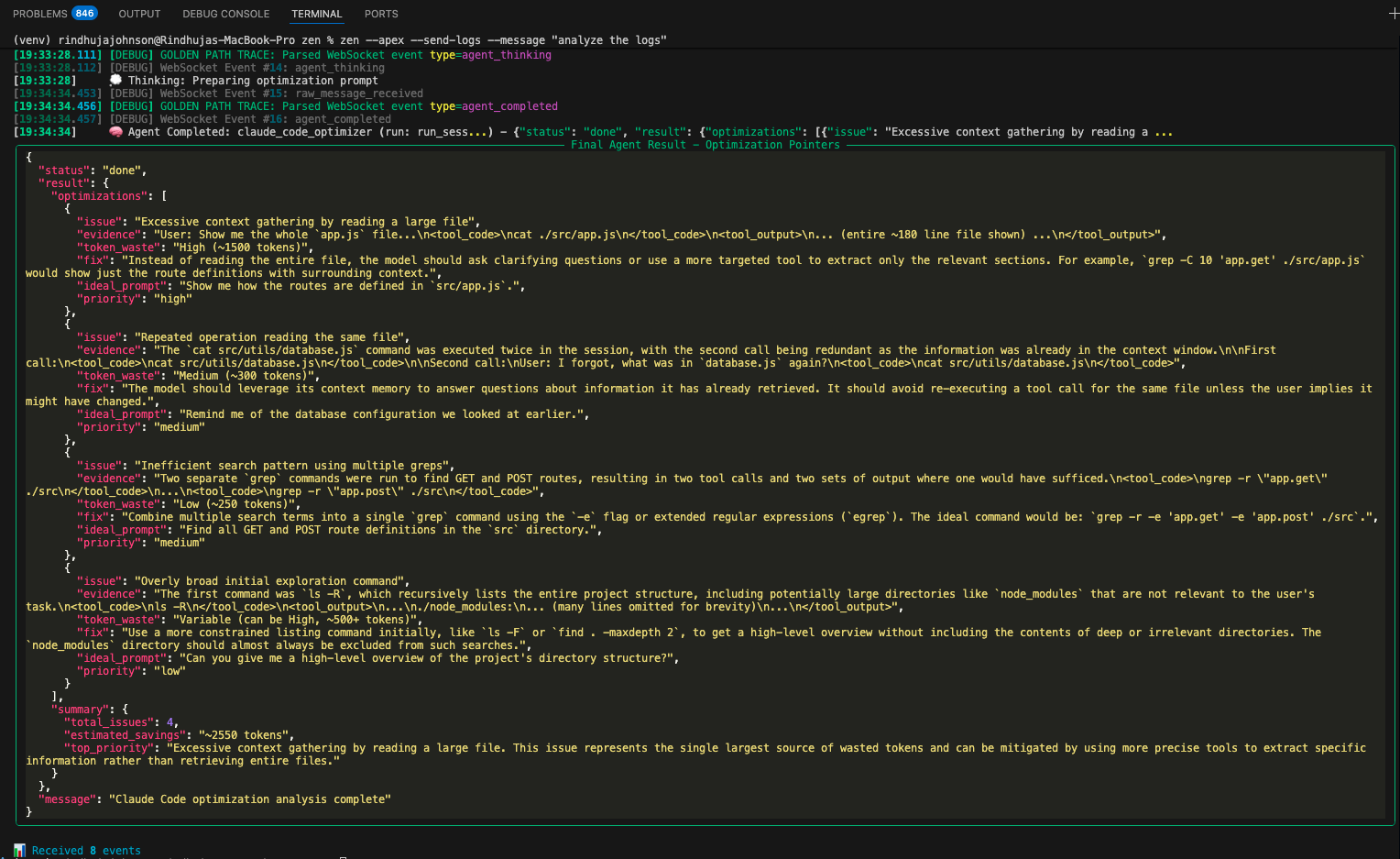

I architect production AI systems with proven results: 7,000–10,000 tokens saved per session, 25–30% improvements in AI accuracy, and enterprise-scale cloud deployments. I work with Claude, GPT-4, Gemini, FastAPI, and GCP infrastructure to deliver measurable business value.